EmptyBottle

🔑 LTO tape exists

- Apr 10, 2025

- 749

I am answering a mean-spirited-sounding message in a calm, logical manner. The majority (3 people) agreed with my initial post, but the thread was locked before I checked it again (it was later unlocked, but this reply is no longer relevant to post there).

Even a general disagreement (e.g., posting the screenshots of the other detectors and their limitations) would have been better than what the other user actually wrote.

sanctioned-suicide.net


Quoted in bulletin-board style for better readability. This reply bears no ill will and is saved to disk locally in case of unfair takedowns.

> Sorry... but what exactly have you contributed to this discussion, other than publicly demonizing someone who consistently wrote clearly and brought meaningful data to the table?

I have stated why the story could be legitimate.

> Did your brain light you on the road to Damascus? If your brain were of any use, you would have produced something useful instead of investigating Pyrrhic discoveries

[This surprising statement needs no reply]

> Do you really feel good about yourself after posting screenshots of an AI detector in an attempt to discredit someone? Honestly, aren't you even a little ashamed?

My intention wasn't to discredit the person, but to question the accuracy of that specific post, since that post itself appears to discredit someone. Maybe the Hmmph reaction was a bit strong, but I am not ashamed to point out AI use.

> Here's what I did: I took the exact same text and ran it through other detectors: QuillBot, Scribbr, ZeroGPT. Guess what? They all said the text was 100% human-authored.

>>Screenshot, one of the detectors actually said 12.7% AI<<

Not all the detectors said it was human; one screenshot showed 12.7% AI.

> And here's the ironic part: even GPTZero itself admits in its official documentation that it can produce false positives and has clear limits on reliability.

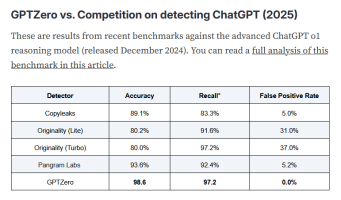

I am well aware of the limits of these tools; that is why I also mentioned the phrase "moralistic fiction", which made me feel the text was AI-written. The phrase "language of trauma" is also suspicious. Also, https://gptzero.me/news/ai-accuracy-benchmarking/ says that GPTZero has the lowest error rate of the competition. That's why I used it. (image attached)

> But obviously you ignored this, because the content isn't your problem; the author's is. The problem isn't whether a text looks like it was written by an AI.

As I said before, the author isn't my problem; the content's accuracy is.

> The real problem is when someone, having nothing to say, appropriates a paltry percentage to orchestrate a public takedown.

I had something unique to say: "I don't believe it was fake, yes they omitted information; mentioning they won't get into the gory details, but the interruption could have been at any point around unconsciousness." I wasn't aiming to orchestrate a public takedown either, just to question the accuracy of the specific post.

> And honestly, I can't describe how petty it is. When arguments are lacking, pettiness is all that remains. I've attached screenshots: let everyone judge who's actually contributing and who's just being a cheap accuser.

It wasn't petty to point out AI use *after* addressing the post's content, and yet again, I didn't come to accuse.


Worried about hanging myself tomorrow

I've gotten myself all prepared to hang myself tomorrow. I've got some good rope, a ladder and a good spot in my house to hang myself. I was just doing a little bit of extra research and came across a post on reddit where the poster talks about their very painful experience they had whilst...
